navigating the "AI wars"

mission statement:

the cultural war thingy around generative AI is very interesting & frustrating to me. it's something i've had complicated feelings about for a long time, & i often feel like there is no space for me to speak online about my views. the AI discussion online is extremely polarized right now. i've been reading a lot about social media, & on these platforms polarization is a feature, not a bug.

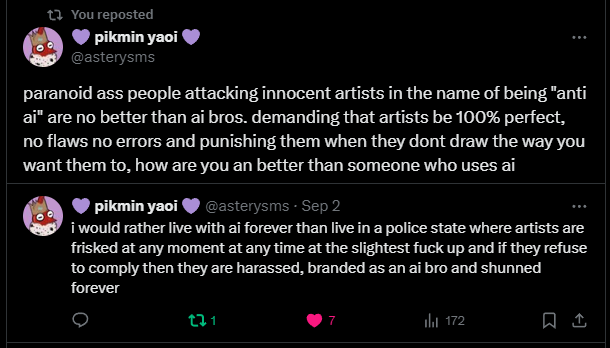

the moral panic about AI has been making people act really weird. over the last few months, some of my favorite artists have been accused (or investigated or whatever) of using AI on flimsy evidence. people have decided it's normal to demand "proof" from artists in the form of sketches, layers, or speedpaints/timelapses. when the artists stand up for themselves or refuse to comply, it's basically seen as an admission of guilt. in the most recent example i saw, someone made an entire callout thread for an artist, circling spots all over several pieces of their artwork & pointing to flaws & style inconsistencies as evidence.

i think there's something very sad about the fact that we’re now using the inherently human trait of making mistakes as evidence that something is generated by AI. that, to me, feels like a loss of faith in humans. this person on twitter summed up my thoughts very well:

and that really only illustrates one facet of what's so frustrating about how these conversations have been playing out online for the last couple of years. i've been trying to decide where i stand in the AI debate dot com. it's funny, because someone on Bluesky added me to a list called "AI Libertarians." that's not at all accurate to how i look at it, because i think gen AI needs to be regulated to hell and back, which i'm pretty sure is the opposite of a libertarian stance. i'm not "anti AI," but i'm not "pro AI" either. i think the best way to describe my perspective is that i'm "AI conscious."

one day i'll have a better explanation of what i mean, but for now, i guess the best way for me to describe what "AI conscious" means to me is that i am critical of genAI, while also embracing the technology to a degree. i'm extremely aware of the ethical concerns, & i advocate for a more ethical, regulated use of this technology. i'm against the "AI arms race" between all these corporations & i hope the AI bubble crashes & burns. i'm very scared about what a future where this tech goes unregulated looks like.

but i also feel there's a bit of alarmism in the conversation. i don't think it's helpful or appropriate to harass people who do use AI, whether they create AI-generated art or use LLMs or whatever. & it's certainly not appropriate to harass people because they might be using AI.

basically, it's a complicated situation & i think nuance is lacking in almost all of the discussions i see online. my goal here is to provide some more nuance. i also want to understand genAI better. as the "No AI" sentiment has grown over the last few years, the amount of misinformation on the subject has been hard to sift through. as i find resources helpful to understanding genAI, i will share them here. i'm hoping for a future where the discussion around genAI is more nuanced, more constructive, & more focused on critical thought instead of knee-jerk emotional reactions.

my AI library

my AI timeline

by ME ^_^

this is a side-project of mine where i'm trying to chart the technological advances, public opinion, etc. of generative AI from its inception to the current day. currently on 2019. (it's a WIP ;D)

Meet the Five Schools of Thought Dominating the Conversation about AI

by a bunch of dudes (June 2023)

why i think it's important: i refer people to this article a lot because i find the fifth school of thought, "Global Governista," does the best job of explaining my philosophical stance toward AI. i also think it's just a good primer on this discussion in general.

Are We Art Yet? (AWAY)

why i think it's important: the AWAY collective describes itself as "a coalition of artists working to bridge the divide between traditional, digital, and AI creators." i think this is super important, because it promotes a collaborative and ethical approach to using AI, ensuring that technological advancements enhance instead of undermine the work of traditional artists. i think this is a good step toward decreasing the polarization in the AI discussion.

CommonCanvas: An Open Diffusion Model Trained with Creative-Commons Images

why i think it's important: the idea of training an AI model on only Creative Commons images is so cool to me. the biggest ethical issue i have with gen AI is that living creators' copyrighted works were used to train the models without their consent. CommonCanvas only being trained on Creative Commons images is a step in the right direction for more ethical AI use in my opinion, & i'm hoping that this trend can continue. i've been using CommonCanvas to make some weird stuff.

Spawning.ai

why i think it's important: Spawning is so cool to me. they aim to create a comprehensive solution for both rights holders & AI devs, making sure that data preferences are respected across the "AI ecosystem". they're responsible for making the "have i been trained?" program & Kudurru, which you can use to block scrapers from accessing your site's content. they've been promoting the ability for artists to opt out of having their works used to train the new stable diffusion model. again, i think this is a step in the right direction. ideally we'd be talking about artists opting IN instead of having to opt out, but i think this is still one step closer to making sure artists can actually consent to having their work used to train AI. you can follow Spawning on twitter

Fact: There is no effective way to ban or limit the use of AI.

(reddit)

why i think it's important: this is kind of a placeholder link, because right now, i can't find any good ~professional~ or academic sources that go into how difficult it would be to completely ban gen AI due to the whole... open source software element of it. i see a lot of people advocate that "generative AI should be banned" & i don't think they understand how Sisyphean that task would be, lol. that's why i strongly push for regulation. in my opinion, regulation is more feasible & effective than any attempts to ban AI. (if you happen to find a better source for how difficult it would be to do a blanket, global ban on AI, let me know!)

Artisanal Intelligence: what's the deal with AI art

by Pol Clarissou (February 2023)

why i think it's important: this post is really important to me, but it's hard for me to summarize it succinctly. i think the most important takeaways i got from this post are the critique of the idea that art has a "soul," the warning that trying to protect artists from AI through stricter IP laws is misguided & may actually end up hurting smaller artists, & the call for artists to resist the commodification of art itself. i like the idea of art being part of a collective culture instead of private property. there's a lot of really good stuff here, if u have the time to read it all.

quote: For all intents and purposes, art produced for the market is already procedurally generated by market forces. The process of selection is at play at both the inception end (by selecting which projects get funding, and even prior, which creators and modes of production are allowed to thrive in the industry) and at the distribution end (through competition between pieces - which is a matter of marketing and platform penetration much more than any kind of metric of artistic merit, if such a thing really existed in the abstract). Can the capitalist market not be described as an analog algorithm optimized for the maximum production of abstract capitalist profit without concern for any other metric? Now that's the kind of rogue AI i want to tear apart.

I once again ask people to stop using "art fed to the AI model" and other similar phrases

by engineeredd (December 2022)

why i think it's important: this poster argues that misconceptions about how genAI models work, such as the belief that they perfectly replicate artists' styles or "steal" art, oversimplify the reality that these models learn from broad, diverse datasets instead of copying+pasting other pieces of art together. but what is equally important about this post is how the author warns that proposed solutions, like stricter regulation or style control, could lead to restrictive and harmful intellectual property frameworks that reinforce power hierarchies, missing the broader issue of how creativity evolves within a social & collective context.

quote: People think about intellectual property rights as being something universal and eternal, but in fact the field is very much in flux. The doctrine stated above can be, if not abolished, then at least circumvented. The current worldwide intellectual property architecture is actually very modern, formed in the mid 90s with the TRIPS agreement. The term itself basically didn't exist before the mid '80s. There were other regimes governing what we call IP, because it was acknowledged that it wasn't ... property.

Is AI eating all the energy? Part 1/2

by Gio (August 2024)

why i think it's important: this article examines the energy consumption of AI, comparing it to other technologies like cryptocurrency, & argues that AI's energy use should be evaluated based on the value it provides, such as efficiency gains and task automation. while AI does contribute to data center energy usage, its impact remains relatively small.

quote: Watching one hour of Netflix takes 0.8 kWh. If you used that hour talking with an LLM like ChatGPT for no purpose other than entertainment, you could generate a reply every 14 seconds and still use less power than streaming. Sitting down and doing nothing but generate Stable Diffusion nonstop for an hour with a 200 W GPU takes the same 200 Wh that playing any video game on high settings would. Any normal consumer AI use is going to be equivalent to these medium-energy entertainment tasks that we basically consider to be free. I’ve turned on a streaming service just to have something playing in the background, and probably so have you. AI just doesn’t use enough power to be more wasteful than just normal tech use.
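to sanity-check the numbers in that quote, here's a tiny python sketch of the arithmetic. the only inputs are the figures Gio gives (0.8 kWh per hour of streaming, one reply every 14 seconds, a 200 W GPU). the variable names are mine, & the per-reply number is just the ceiling the quote implies, not something anyone measured.

```python
# figures taken straight from the quote above
NETFLIX_WH_PER_HOUR = 800.0      # 0.8 kWh for one hour of streaming
SECONDS_BETWEEN_REPLIES = 14     # one LLM reply every 14 seconds
GPU_WATTS = 200.0                # GPU running Stable Diffusion nonstop

# how many replies fit into one hour at that pace
replies_per_hour = 3600 / SECONDS_BETWEEN_REPLIES

# for the chat hour to use less energy than the streaming hour,
# each reply has to cost less than this many watt-hours
# (an implied ceiling, NOT a measured per-reply figure)
max_wh_per_reply = NETFLIX_WH_PER_HOUR / replies_per_hour

# watts * hours = watt-hours, so one hour on a 200 W GPU is 200 Wh
gpu_wh_for_one_hour = GPU_WATTS * 1.0

print(f"replies per hour: {replies_per_hour:.0f}")
print(f"implied ceiling per reply: {max_wh_per_reply:.2f} Wh")
print(f"one hour of image generation: {gpu_wh_for_one_hour:.0f} Wh")
```

so the comparison holds as long as a single reply costs less than roughly 3.1 Wh, & an hour of nonstop image generation on that GPU comes to a quarter of the streaming hour.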

LLM Water And Energy Use

by Milja Moss (July 2024)

why i think it's important: this article explores the water and energy consumption of large language models (LLMs) like GPT-3, noting that generating 100 pages of content consumes around 0.4 kWh and approximately 8.6 liters of water. Moss compares AI's resource usage to everyday activities like playing video games or running household appliances, & suggests that smaller models like GPT-3.5-Turbo are likely more efficient.

quote: When determining whether you should be using services like ChatGPT, the question then becomes: "Are 1600 responses from ChatGPT as useful to me as 2 hours of video games?"
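here's a rough python sketch that breaks those numbers down per page, using only the figures from my summary above (100 pages, 0.4 kWh, 8.6 liters). the helper `implied_wh_per_response` is something i made up to show the shape of the "1600 responses vs. 2 hours of games" comparison. the 300 W gaming setup in the example is a placeholder i picked for illustration, it is not a number from Moss's article.

```python
# figures from the summary above: 100 pages ~ 0.4 kWh and ~ 8.6 liters
PAGES = 100
ENERGY_WH = 400.0     # 0.4 kWh expressed in watt-hours
WATER_ML = 8600.0     # 8.6 liters expressed in milliliters

wh_per_page = ENERGY_WH / PAGES
ml_per_page = WATER_ML / PAGES


def implied_wh_per_response(gaming_watts, hours=2.0, responses=1600):
    """Energy per response if `responses` replies equal `hours` of gaming.

    `gaming_watts` is a hypothetical input: plug in whatever your own
    setup draws. The 2 hours and 1600 responses come from the quote.
    """
    return gaming_watts * hours / responses


print(f"{wh_per_page:.1f} Wh per page")
print(f"{ml_per_page:.0f} mL of water per page")

# ASSUMPTION: 300 W is an illustrative placeholder, not a sourced figure
print(f"{implied_wh_per_response(300.0):.3f} Wh per response at 300 W")
```

the point isn't the exact wattage, it's that you can swap in your own numbers & see how the comparison shifts.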

How I Use "AI"

by Nicholas Carlini (August 2024)

why i think it's important: Carlini argues that LLMs like GPT-4 are not over-hyped, highlighting their significant impact on his productivity, particularly in coding, learning new technologies, & automating repetitive tasks, making him at least 50% faster in writing code. he emphasizes that despite their limitations, LLMs are already valuable tools for specific applications, aiding in many of his daily tasks. i think this is important because an argument i hear a lot is "LLMs aren't even helpful! they can't do anything!" like Carlini, i've also found many practical uses for LLMs. one day i'd like to write a post like this, showing all the ways i use ChatGPT to help me w/ my problems.

quote: One of the most common retorts I get after showing these examples is some form of the statement “but those tasks are easy! Any computer science undergrad could have learned to do that!” And you know what? That's right. An undergrad could, with a few hours of searching around, have told me how to properly diagnose that CUDA error and which packages I could reinstall. An undergrad could, with a few hours of work, have rewritten that program in C. An undergrad could, with a few hours of work, have studied the relevant textbooks and taught me whatever I wanted to know about that subject. Unfortunately, I don't have that magical undergrad who will drop everything and answer any question I have. But I do have the language model. And so sure; language models are not yet good enough that they can solve the interesting parts of my job as a programmer. And current models can only solve the easy tasks.